While graph interaction networks achieve exceptional results in Higgs boson identification, GNN explainer methodology is still in its infancy. To introduce GNN interpretation to the particle physics domain, we apply layerwise relevance propagation (LRP) to our existing Higgs boson interaction network (HIN) to calculate relevance scores and reveal what features, nodes, and connections are most influential in prediction. We call this application HIN-LRP. The synergy between the LRP interpretation and the inherent structure of the HIN is such that HIN-LRP is able to illuminate which particles and particle features in a given jet are most significant in Higgs boson identification. The resulting interpretations are ultimately congruent with extant particle physics theory, with the model demonstrably learning the importance of concepts like the presence of muons, characteristics of secondary decay, and salient features such as impact parameter and momentum.

Intro

Graph neural networks (GNNs) are notoriously difficult to interpret, and those employed in the particle physics domain are no different. The interaction network has gained popularity with high energy physicists studying fundamental particles because this graph model achieves highly competitive accuracy while still working with relatively simple, unprocessed data. However, both broadly and in the physics domain, it is often not fully understood how or why graph interaction networks make their classifications. Layerwise relevance propagation (LRP) is the method we've chosen to investigate how these models' inner workings might relate to the physical properties of the universe.

The Higgs boson is a particle that was hypothesized in 1964 by Peter Higgs, and it is associated with a universal field that is responsible for the property of mass. Big deal, right? But it took decades before it was actually confirmed. Evidence finally appeared in 2012 at the Large Hadron Collider (LHC), where scientists slam particles together at extremely high speeds to make other particles, including rare and important ones like the Higgs boson. But even with the particle collider constantly firing, a Higgs event is hard to come by. Not only that, the detectors don’t get to pick up a literal Higgs boson, because it exists for only a brief moment before decaying. The detectors have to look at the decay products, which come in the form of particle jets, and deduce whether or not a Higgs boson event was responsible. Fittingly, these particle jets are the basis of our data.

Particle Jet Data

First, some context on these "jets" and our data. When a spray of particles hits the detector, the particle flight paths and measurements are reconstructed into what are called tracks, which are then grouped into cone-like clusters: the jets. For the purposes of the model, though, it's good to know that we actually train on simulation data. Why? Because in quantum physics we can only predict things probabilistically, so there is no way to confidently label real LHC data. We can say “oh, this was probably a Higgs boson,” but there’s no way of knowing for sure.

So instead, the simulation constructs our data from the ground up, building on physics rules that have been theorized and confirmed. A benefit of this is that we can also make the Higgs boson signal less rare, so that there are enough signal examples to train the model. In the simulated dataset, every entry is an individual jet, and each jet can have a variable number of particles, from as few as 2 tracks to more than 60. For each track we’ve isolated 48 features, such as momentum, mass, and angle. Ultimately, the goal is to look at a jet and say whether it came from a Higgs signal or is simply background.

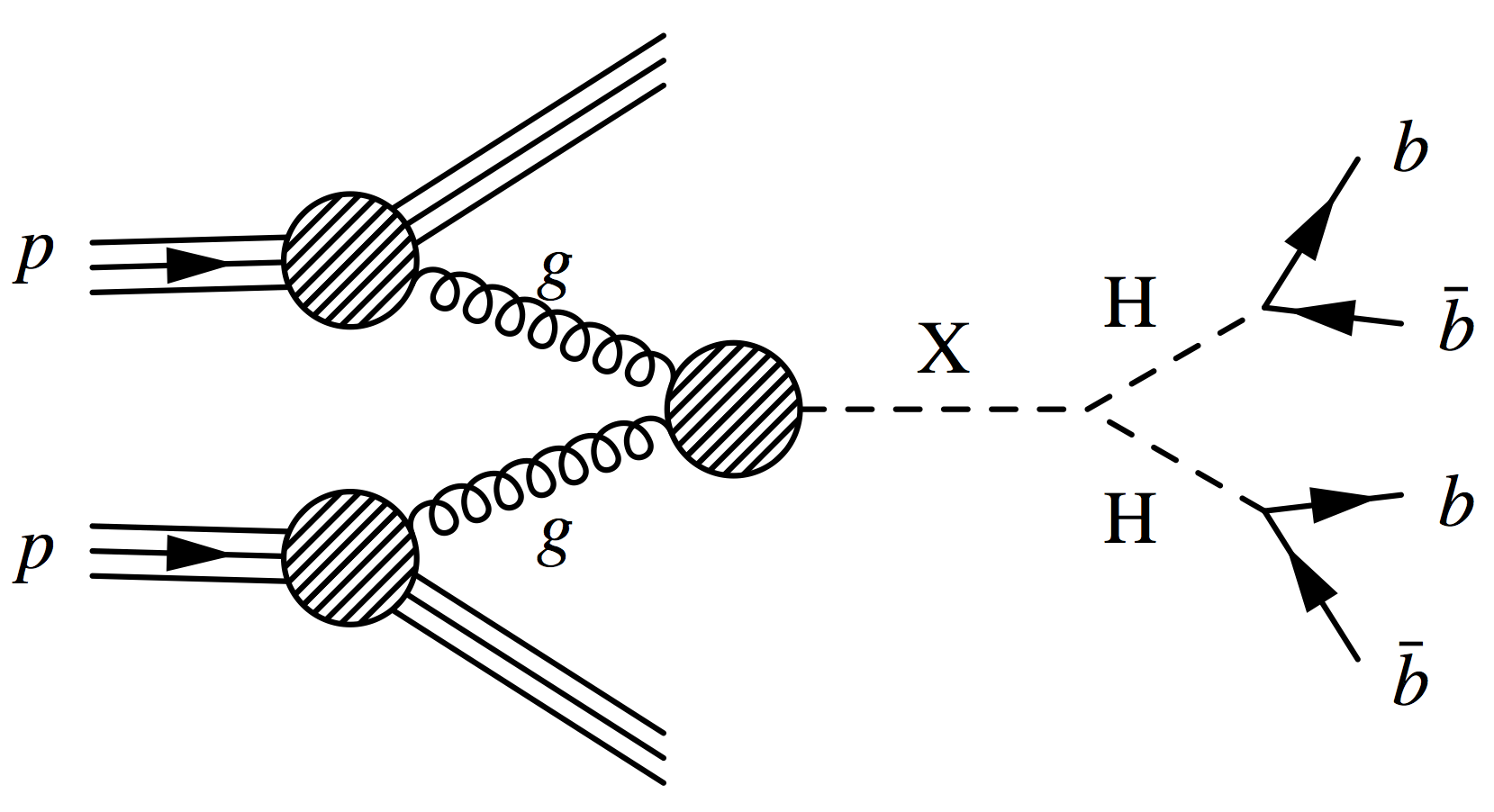

The Signal: H to bb

There is a specific Higgs decay that we are looking for: the Higgs boson decaying into a pair of b hadrons. There are several other Higgs decay modes that can occur, but H to bb is the most common. For the sake of simulation, we have a heavy artificial particle X, which decays into a pair of Higgs bosons, each of which decays into a pair of b hadrons.

Das, Souvik. "Pair Production Of The Higgs Boson". Home.Fnal.Gov, 2021, https://home.fnal.gov/~souvik/HH/index.html. Accessed 26 Feb 2021.

To make things tougher, a given jet wouldn’t only have b hadrons; it’d also be full of other particles from adjacent and related decays. It’s altogether quite chaotic, which is why this classification problem is well suited to machine learning.

The Higgs Boson Interaction Network Model

That brings us to the Higgs boson interaction network, or HIN. This deep learning model is quite successful at the problem of Higgs boson identification. See this paper.

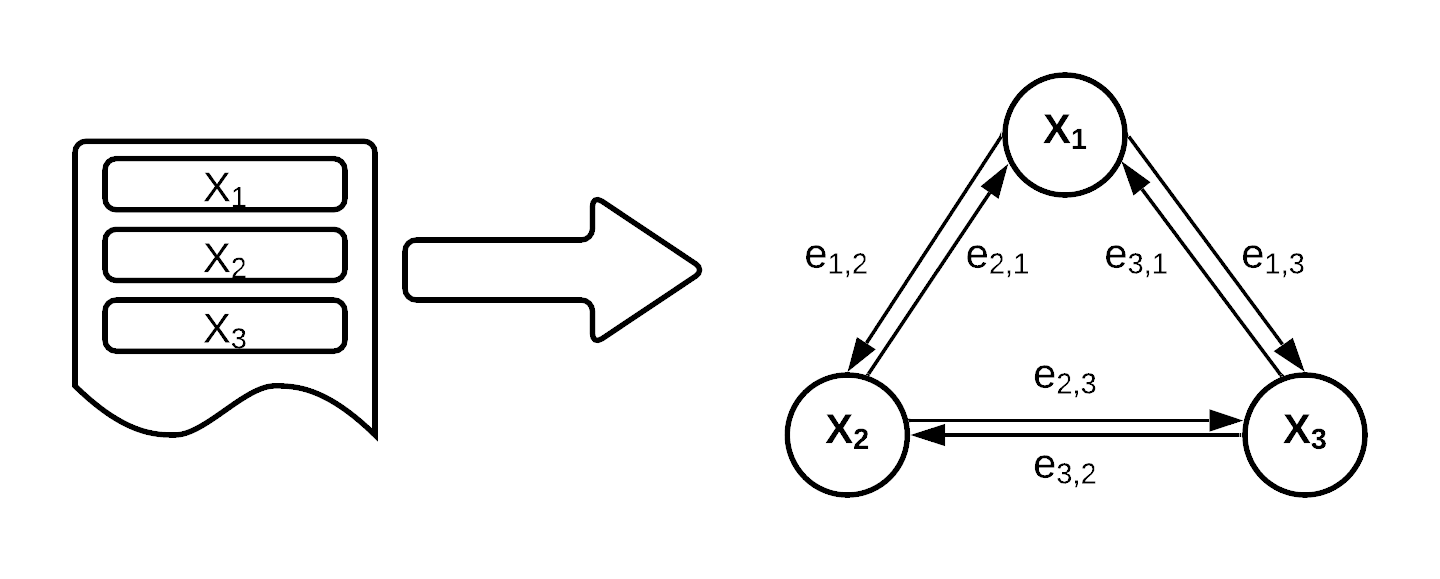

Interaction networks are a kind of graph neural network. As the name suggests, the interaction network excels at modeling the interactions of objects in complex systems. For the inputs, we take the simulated data and regroup it into a complete directed graph where every node is a particle carrying that particle’s 48 feature values, and every edge represents a directional relationship between a pair of particles.

Why do we represent the jets as graphs? Remember: a jet is a cluster of particles, and there is no inherent ordering of those particles. So graph models are desirable in that they reflect the absence of an inherent ordering. Even better, by fully connecting the graph, we are giving the model a truth to start from: these particles are all connected in some way. They come from the same collisions, primary decays, maybe even the same secondary decays. It is then just up to model training to decide how connected they are and how important those connections are to determining whether it is a Higgs signal.
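The complete directed graph described above can be sketched in a few lines. This is only an illustration, not our actual data loader; the function name `complete_directed_edges` is hypothetical. For a jet with n tracks it produces the n(n-1) ordered pairs of distinct tracks, with no self-loops.

```python
from itertools import permutations

def complete_directed_edges(n_tracks):
    """Return (senders, receivers) for every ordered pair of distinct tracks."""
    pairs = list(permutations(range(n_tracks), 2))
    senders = [s for s, _ in pairs]
    receivers = [r for _, r in pairs]
    return senders, receivers

# A toy jet with 3 tracks; in the real data each node would also carry
# that track's 48 feature values.
senders, receivers = complete_directed_edges(3)
edges = list(zip(senders, receivers))
print(edges)  # both (0, 1) and (1, 0) appear, because edges are directional
```

Because the edge count grows as n(n-1), a 60-track jet already has 3,540 directed edges, which is why the later 3D plots look so dense.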

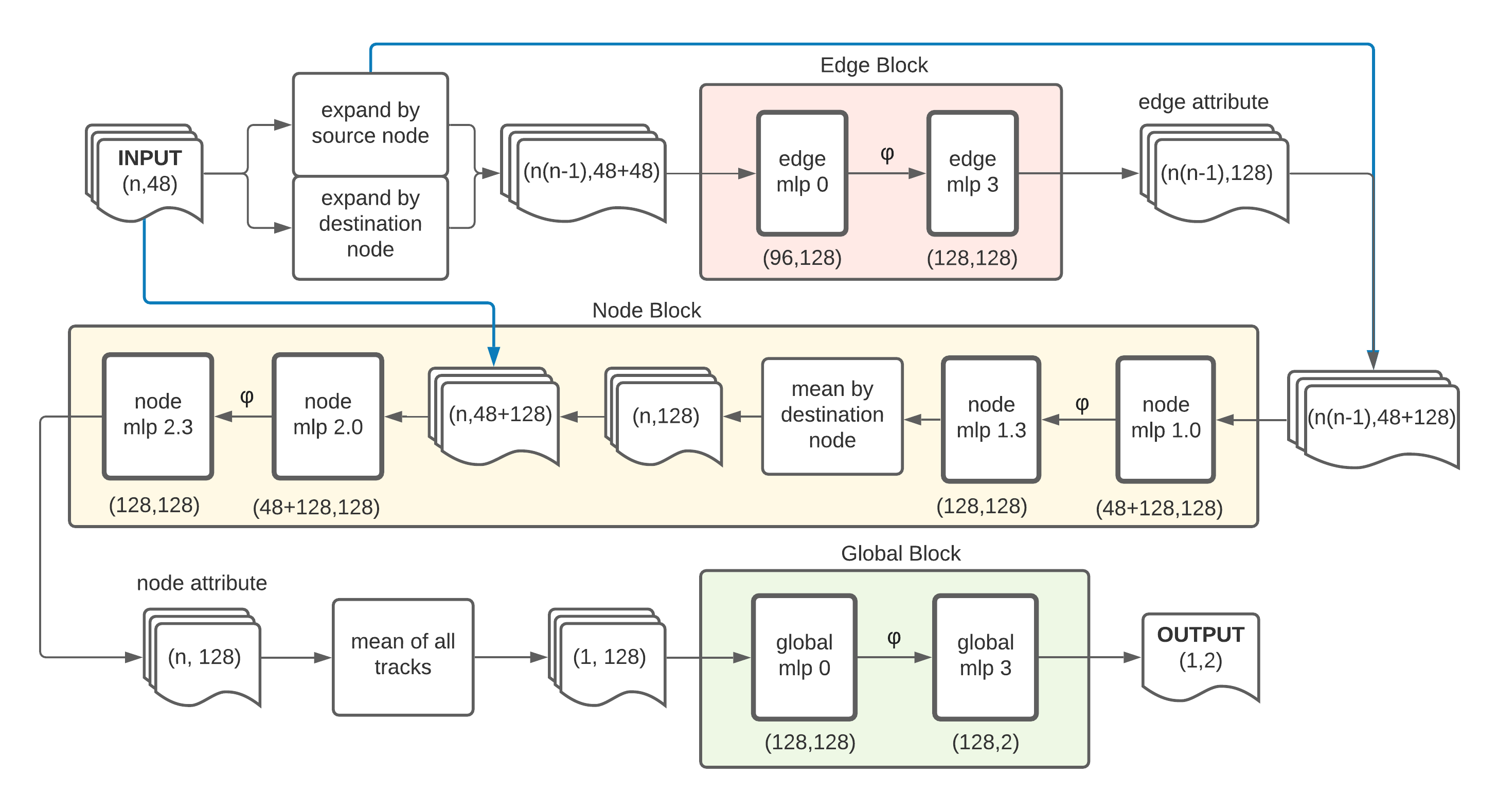

We built the model in PyTorch Geometric, which handles much of the graph connectivity and training structure for us. The core part of the HIN is the interaction block. The interconnectedness of this block allows us to model the flow of information between nodes, that is, between pairs of particles. PyTorch Geometric’s MetaLayer class abstracts a lot of this interconnectedness for us. Note how the new edge hidden features, once calculated, influence the new node hidden features and global hidden features, and how the new node hidden features in turn feed into the global hidden features.

Each block is encoded with its respective transformation sequences: concatenations, linear transformations, batch normalizations, and ReLU activations. Our fully trained model will pass the input data through these blocks and return a softmaxed probability of how likely a jet is to be a Higgs boson signal.
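To make the flow through an interaction block concrete, here is a simplified NumPy sketch of a single pass: edge update, node update, then a global readout with softmax. It is not the HIN itself. The hidden size, the random stand-in weights, the omission of batch normalization, the node-only global readout, and the (background, signal) ordering of the two outputs are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

n_tracks, n_feat, hidden = 4, 48, 8        # hidden size is hypothetical
x = rng.normal(size=(n_tracks, n_feat))    # node features, one row per track

# Complete directed graph: every ordered pair of distinct tracks.
send, recv = np.array([(s, r) for s in range(n_tracks)
                       for r in range(n_tracks) if s != r]).T

# Random stand-ins for trained weights.
W_edge = rng.normal(size=(2 * n_feat, hidden)) * 0.1
W_node = rng.normal(size=(n_feat + hidden, hidden)) * 0.1
W_glob = rng.normal(size=(hidden, 2)) * 0.1

# Edge block: concatenate sender and receiver features, then linear + ReLU.
e = relu(np.concatenate([x[send], x[recv]], axis=1) @ W_edge)

# Node block: sum incoming edge features per receiving node,
# concatenate with the node's own features, then linear + ReLU.
agg = np.zeros((n_tracks, hidden))
np.add.at(agg, recv, e)
h = relu(np.concatenate([x, agg], axis=1) @ W_node)

# Global readout: pool all nodes, map to two logits, apply softmax.
probs = softmax(h.sum(axis=0) @ W_glob)
print(probs)  # two probabilities that sum to 1
```

The point of the sketch is the dependency chain: edge features are computed first, node features depend on them, and the jet-level output depends on both.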

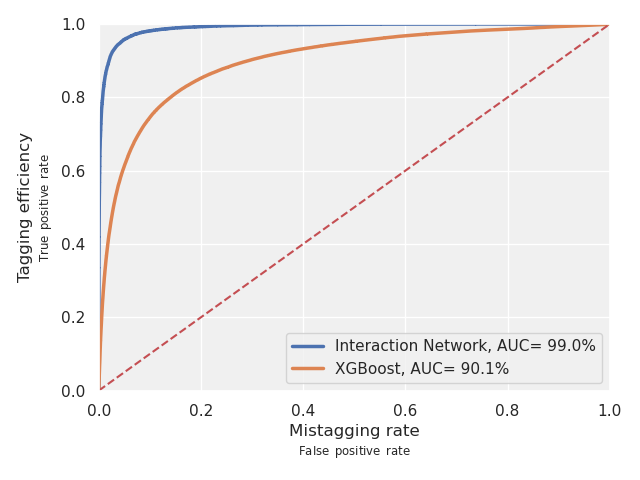

But most importantly, here’s how it performs: a lovely 99% ROC AUC! Compared to a straightforward boosted decision tree, our interaction network’s ROC curve is nearly a right angle, which is quite ideal!
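For readers less familiar with the metric, ROC AUC can be read as the probability that a randomly chosen signal jet receives a higher score than a randomly chosen background jet. Below is a minimal sketch of that definition with made-up labels and scores; these are not outputs of the HIN.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (signal, background) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    # Count a correctly ordered pair as 1 and a tie as 0.5.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

labels = [1, 1, 1, 0, 0, 0]                       # 1 = signal, 0 = background
scores = [0.95, 0.80, 0.40, 0.60, 0.20, 0.05]     # made-up model outputs
auc = roc_auc(labels, scores)
print(auc)  # 8 of the 9 signal/background pairs are ordered correctly
```

An AUC of 1.0 corresponds to the perfect right-angle ROC curve, so 99% means almost every signal jet outranks almost every background jet.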

So we have this model, with a pretty splendid accuracy. But we don’t automatically understand how or why the model comes to these conclusions. This is where LRP comes in:

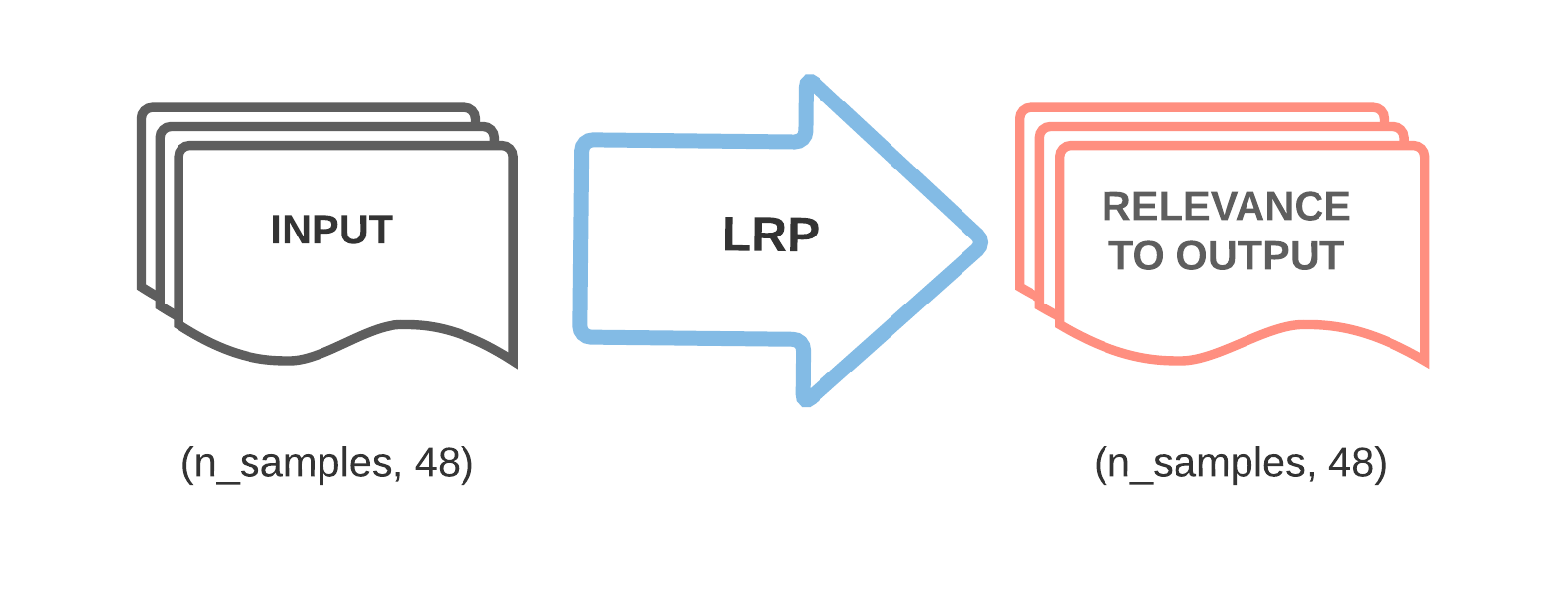

Layerwise Relevance Propagation

LRP is a technique that has found use in interpreting several other neural networks, particularly image convolutional neural networks. More recently, its potential for GNN explanation is being discovered. Like water in a forking river, we branch across the model pathways, distributing relevance scores throughout each layer, all the way back to the inputs. Put simply, we want to use LRP to find out how relevant each part of the input is to the output. So we take our input matrix, use some LRP magic, and for every value in the input, we get a corresponding score telling us how relevant it is to the output.

For our model, this means that LRP allows us to interpret model behavior on a jet-by-jet basis. Recall that the HIN takes a jet as input and outputs a Higgs boson likelihood for that jet. Here, we reverse this process using LRP: we start from the Higgs boson likelihood and, in return, find out which particles, edges, and even features of that jet were most relevant.

Now, you might have realized that LRP is very similar to regular backpropagation through the model, except that it propagates relevance scores instead of gradients. It operates on a gradient times input basis, essentially a Taylor expansion based calculation used to get the relevance at each layer.
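The gradient times input idea is easiest to see on a single linear score, where the gradient with respect to each input is just its weight. The weights and inputs below are made up purely for illustration.

```python
# For a linear score f(x) = sum_i w_i * x_i, the gradient with respect
# to x_i is w_i, so "gradient times input" gives relevance w_i * x_i.
weights = [0.5, -2.0, 0.0, 1.5]
x = [2.0, 1.0, 3.0, -1.0]

score = sum(w * xi for w, xi in zip(weights, x))
relevance = [w * xi for w, xi in zip(weights, x)]

print(relevance)       # [1.0, -2.0, 0.0, -1.5]
print(sum(relevance))  # -2.5, the same value as the score
```

Two things carry over to the full method: the relevance scores add up to the output being explained, and an input that the model ignores (weight 0 here) receives zero relevance no matter how large its value is.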

"Heatmapping.Org". Heatmapping.Org, 2021, http://www.heatmapping.org/. Accessed 4 Mar 2021.

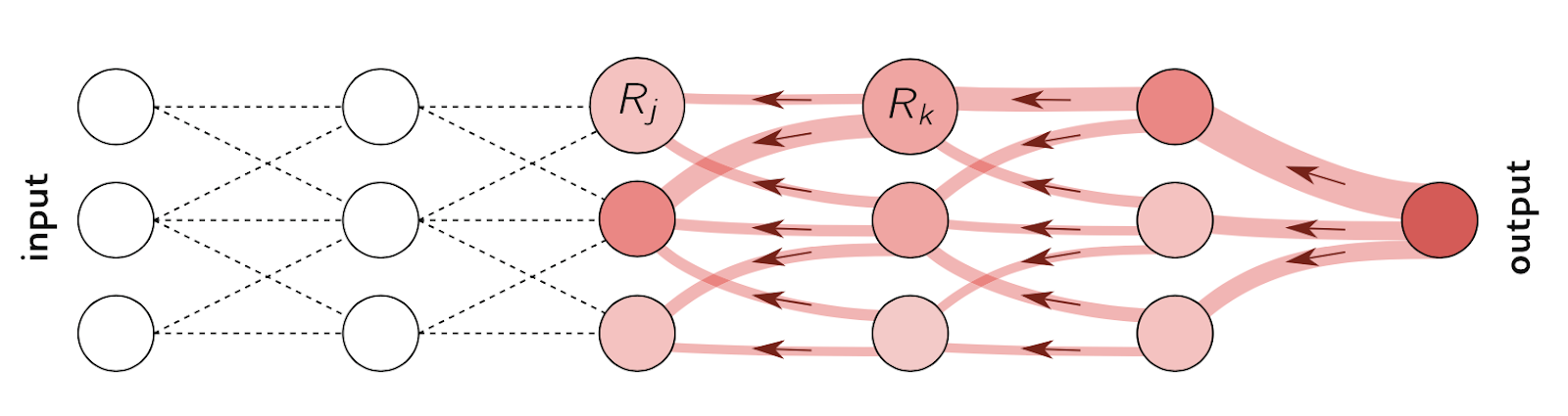

Look at this diagram. Follow the color density from the output as it moves backwards through the nodes. The more “relevant” nodes get more weight, and therefore more color. For our model, this will allow us to highlight relevance for both nodes and edges.

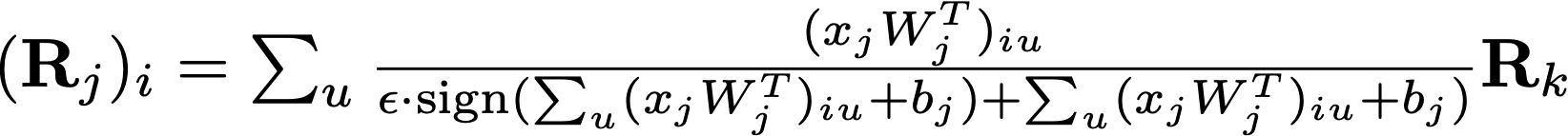

Now for the equation we’re using to propagate relevance: the LRP-ε rule. The equation shown above is applied at each layer to propagate relevance through the model. The rule is derived from the gradient times input concept, with one addition: ε, a small quantity added to the denominator. With this, we avoid numerical instabilities and also absorb some of the relevance, so that only the most important features are highlighted.

For the more mathematically inclined, see the LRP page for a more in-depth breakdown.
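As a rough illustration of the LRP-ε rule, here is a NumPy sketch for plain dense layers, using the standard form R_j = Σ_k a_j w_jk / (ε + Σ_j a_j w_jk) · R_k. It is not our HIN-LRP code: the function name `lrp_epsilon`, the tiny two-layer network, and the choice to give ε the sign of the pre-activation are assumptions made for this example.

```python
import numpy as np

def lrp_epsilon(a, W, b, R_out, eps=1e-6):
    """Redistribute relevance R_out at a layer's output onto its input a.

    a: (n_in,) activations entering the layer
    W: (n_in, n_out) weights, b: (n_out,) biases
    """
    z = a @ W + b                               # pre-activations
    z = z + eps * np.where(z >= 0, 1.0, -1.0)   # stabilizer, same sign as z
    s = R_out / z                               # relevance per unit of z
    return a * (W @ s)                          # share for each input

# Tiny hand-picked network: 3 inputs -> 3 hidden (ReLU) -> 1 output.
x = np.array([1.0, 2.0, -1.0])
W1 = np.array([[1.0, -1.0, -2.0],
               [0.5,  1.0,  0.0],
               [1.0,  0.0,  1.0]])
b1 = np.zeros(3)
W2 = np.array([[2.0], [-1.0], [3.0]])
b2 = np.zeros(1)

h = np.maximum(x @ W1 + b1, 0.0)    # hidden activations: [1, 1, 0]
out = h @ W2 + b2                   # model output: [1]

R_h = lrp_epsilon(h, W2, b2, out)   # relevance of hidden units
R_x = lrp_epsilon(x, W1, b1, R_h)   # relevance of inputs
print(R_x, R_x.sum())               # approximately [3, 0, -2], summing to 1
```

With zero biases and a tiny ε, the input relevances add up to the output (up to the small amount ε absorbs). A larger ε absorbs more, which is what suppresses weakly supported contributions.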

Dummy Model

To test our LRP, we made fake data, and labeled it using an equation such that the only important features were 0 and 3. Everything else is useless noise.

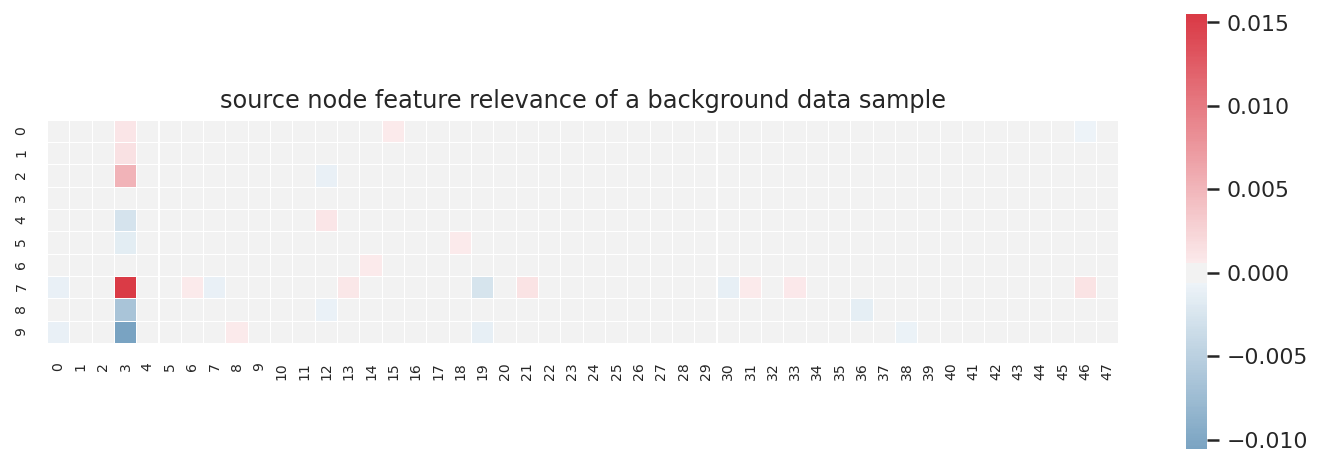

How do we read this map? The relevance scores are visualized in this heatmap, which has the same dimensions as the input. Each cell in the heatmap corresponds to an entry in the raw input, and it lights up if the entry is relevant to the final prediction. Positive or negative, the brighter the color, the more relevant it is to the prediction. The columns correspond to the 48 features whereas the rows correspond to the 10 tracks in the dummy jet.

So clearly feature 3 is aggressively activated. You may be wondering why feature 0 is blank. We actually saw this result and circled back to our label equation, realizing that even though both feature 0 and 3 are in the equation, only feature 3 mattered to the label. And LRP realized that before we did! So it’s quite funny how this slight error in the construction of our sanity check actually exposed a deeper truth about the dummy data, which honestly just makes us more confident in the validity of the method.
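To show how a feature can appear in a label equation without ever affecting the label, here is a purely hypothetical labeling rule. It is not the equation we actually used; it only reproduces the same kind of redundancy on random data with the same shape as the dummy input (10 tracks, 48 features).

```python
import numpy as np

rng = np.random.default_rng(0)
n_jets, n_tracks, n_feat = 1000, 10, 48
X = rng.normal(size=(n_jets, n_tracks, n_feat))   # fake jets, pure noise

f0 = X[:, :, 0].mean(axis=1)    # jet-level summary of feature 0
f3 = X[:, :, 3].mean(axis=1)    # jet-level summary of feature 3

# Hypothetical rule: feature 0 appears in the equation, but its clause
# can only fire when f3 > 1, where the first clause is already true.
y = (f3 > 0) | ((f3 > 1) & (f0 > 0))

# The label is therefore fully determined by feature 3 alone.
redundant = np.array_equal(y, f3 > 0)
print(redundant)  # True
```

A model trained on labels like these has no reason to use feature 0, so a faithful explanation method should leave that column blank, which is the behavior we saw in the heatmap.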

Visualizing Relevance with HIN-LRP

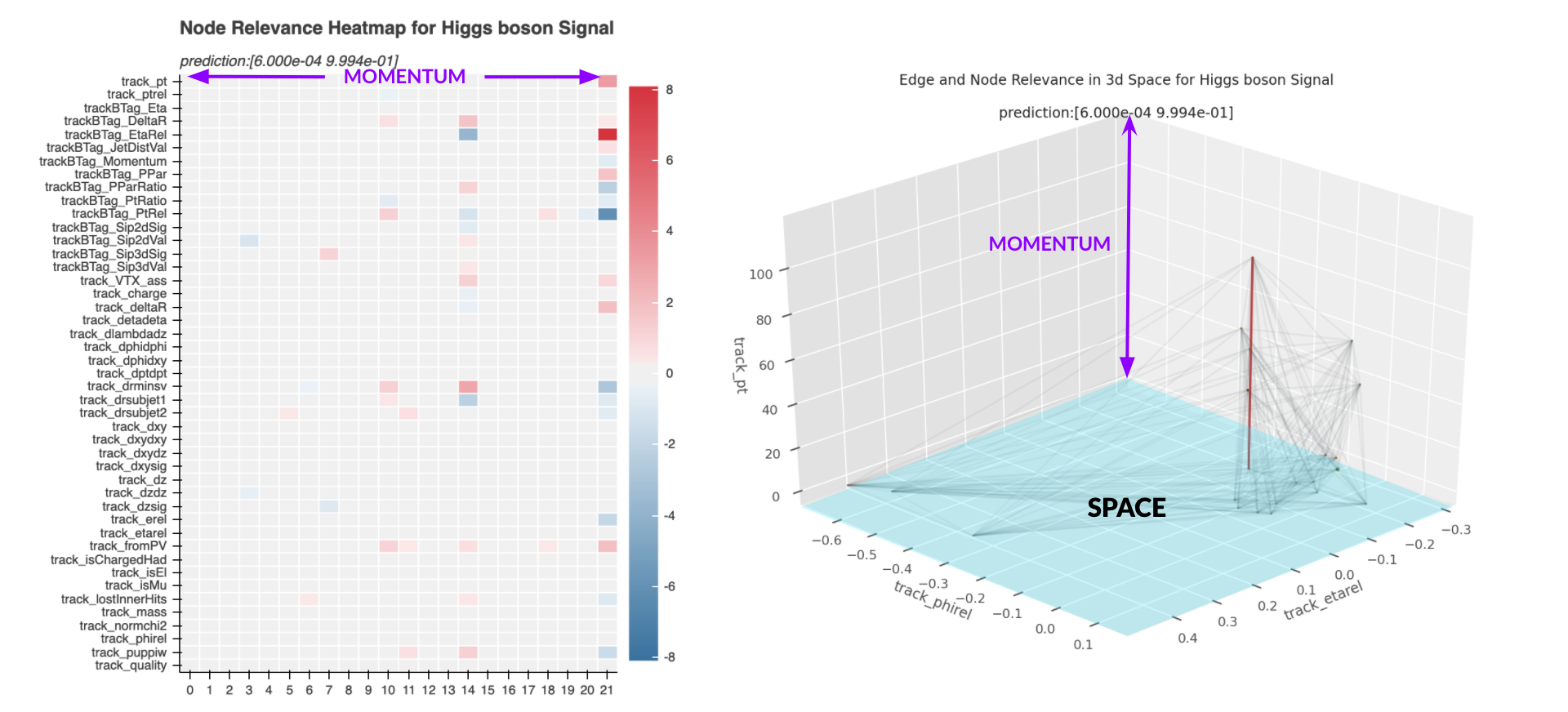

We’ll be looking at four example jets and diving into how LRP interprets each. For each jet, we’ve created a pair of plots to visualize what our model sees for each jet, and what possibly led to the prediction made.

The heatmap on the left looks familiar, right? It’s mostly the same as the one we showed with the dummy input example. Here, the heatmap is transposed to give an easier view of the 48 features. Each row corresponds to one feature, and each column now corresponds to a track in the jet, sorted in ascending order by the top row, track_pt (transverse momentum). Transverse momentum is known to be an important indicator of whether the jet is associated with a Higgs boson. The more intense the color in a cell, the more relevant that entry of the input is to the Higgs probability output. Note that the signs of the relevance scores don’t correspond to positive or negative Higgs signal labeling. Only the magnitude matters.
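The layout of the heatmap can be sketched as a sort followed by a transpose. The toy arrays below use 4 tracks and 3 features instead of 48, and the sketch assumes that track_pt sits in column 0 of the input and that tracks are ordered by the input value of track_pt rather than by its relevance.

```python
import numpy as np

# Toy jet: rows are tracks, columns are features (column 0 = track_pt, assumed).
features = np.array([[5.0, 0.1, 0.2],
                     [1.0, 0.3, 0.4],
                     [9.0, 0.5, 0.6],
                     [3.0, 0.7, 0.8]])
# Made-up relevance scores with the same shape as the input.
relevance = np.array([[ 0.2, 0.0, -0.1],
                      [ 0.0, 0.1,  0.0],
                      [-0.9, 0.3,  0.4],
                      [ 0.1, 0.0,  0.0]])

order = np.argsort(features[:, 0])   # tracks by ascending track_pt
heatmap = relevance[order].T         # rows: features, columns: tracks
strength = np.abs(heatmap)           # only the magnitude conveys relevance

print(order)        # [1 3 0 2]
print(heatmap[0])   # relevance of track_pt per track, lowest pt first
```

After this step the highest-momentum track is always the rightmost column, which makes heatmaps from different jets easier to compare.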

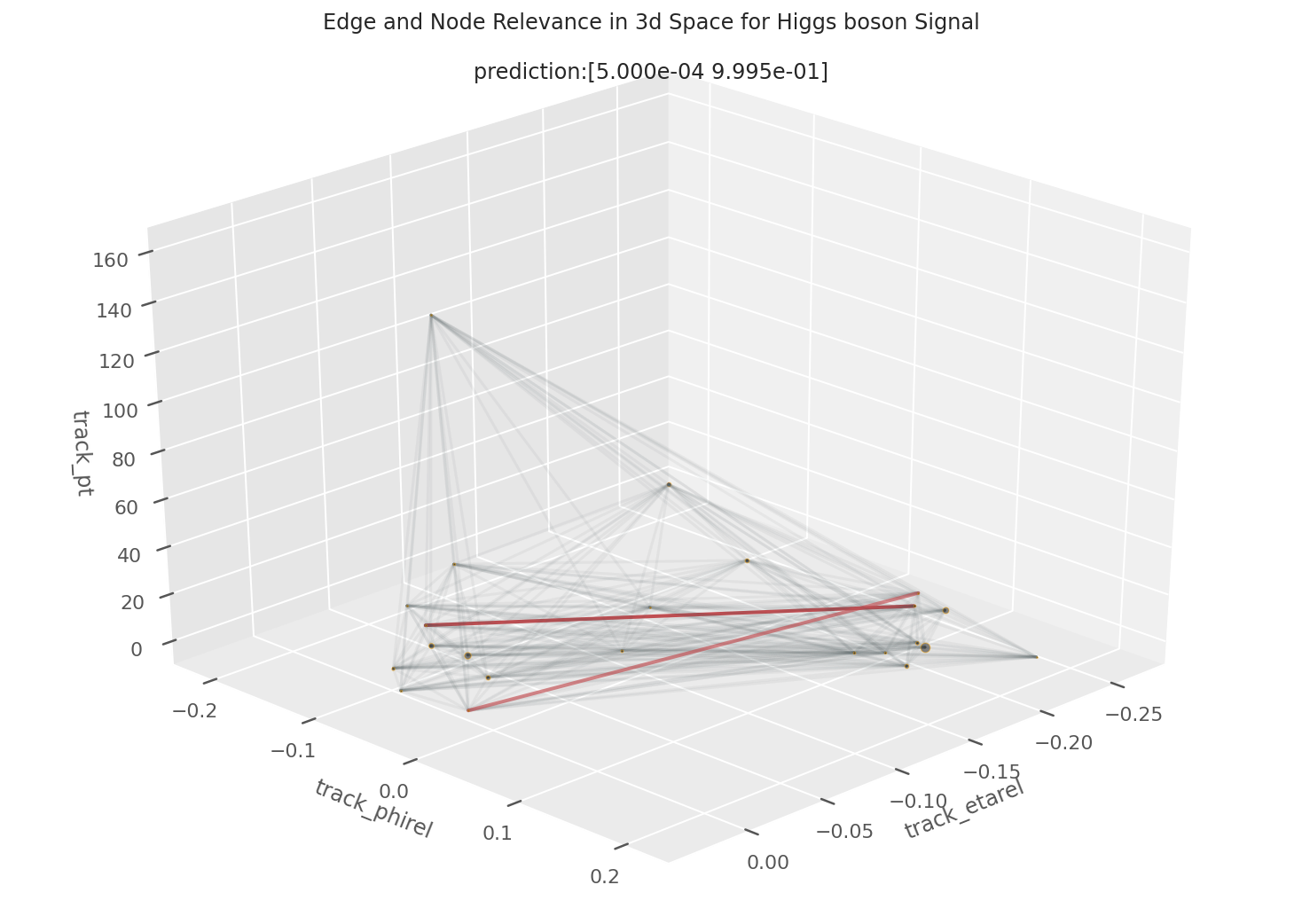

To give a better idea of what our model sees in a jet, we also visualize the significance of the nodes and edges in 3D space, as shown on the right. The 3D network plot projects "polaresque" coordinates φ and η (track_phirel, track_etarel) on the xy plane, and sets the z axis to momentum (again), allowing a clearer view of the dense graph representation of the jet, as well as easy comparison to the heatmap. The sizes of the nodes are determined by node relevance scores, and the edge coloring is determined by their significance in the prediction. The edge significance is a positive, calculated value defined intuitively by the amount of information that flows through it. We can elaborate on the mathematical definition later if you’re interested. The edges receive a positive value between 0 and 1, and in the 3D network plot, the most important edges are highlighted in red.
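The mapping from scores to plot attributes can be sketched as follows. The exact definitions are not given here, so this example makes two loud assumptions: node size is taken from the summed absolute feature relevance of each track, and raw edge scores (made up below) are rescaled to the 0 to 1 range with a simple min-max normalization. Neither should be read as the actual HIN-LRP definition.

```python
import numpy as np

# Made-up relevance scores: rows are tracks, columns are features.
relevance = np.array([[ 0.2, 0.0, -0.1],
                      [ 0.0, 0.1,  0.0],
                      [-0.9, 0.3,  0.4]])

# Assumption: one score per node, from the magnitude of its feature relevances.
node_score = np.abs(relevance).sum(axis=1)

# Made-up raw scores for the 6 directed edges of a 3-track jet.
raw_edge = {(0, 1): 0.02, (1, 0): 0.01, (0, 2): 0.50,
            (2, 0): 0.41, (1, 2): 0.05, (2, 1): 0.03}

# Assumption: min-max rescaling so every edge lands between 0 and 1.
lo, hi = min(raw_edge.values()), max(raw_edge.values())
edge_sig = {edge: (v - lo) / (hi - lo) for edge, v in raw_edge.items()}

top_edge = max(edge_sig, key=edge_sig.get)   # the edge to highlight in red
print(node_score, top_edge)
```

In this toy jet the largest node (track 2) is also an endpoint of the highlighted edge, mirroring the pattern we point out in the examples.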

Let's look at some examples, where the heatmap is interactive so we can see feature values. The library of feature definitions can be found here.

EXAMPLE 1: 99.94% Higgs Boson Signal

In this example, the HIN is more than 99.9% confident in its prediction. The colored cells are mostly distributed in columns 14 and 21, suggesting that those two particles are most relevant to the prediction.

The Higgs boson decays into two b hadrons, right? Our heatmap implies that the model can perceive the two-pronged nature of a Higgs boson decaying into the b hadron pair. Additionally, note how the highest momentum node is the most important, and in the 3D space we see that the edge connecting to this node is highly activated. Physicists have shown that Higgs boson decay products are likely to have larger transverse momentum values.

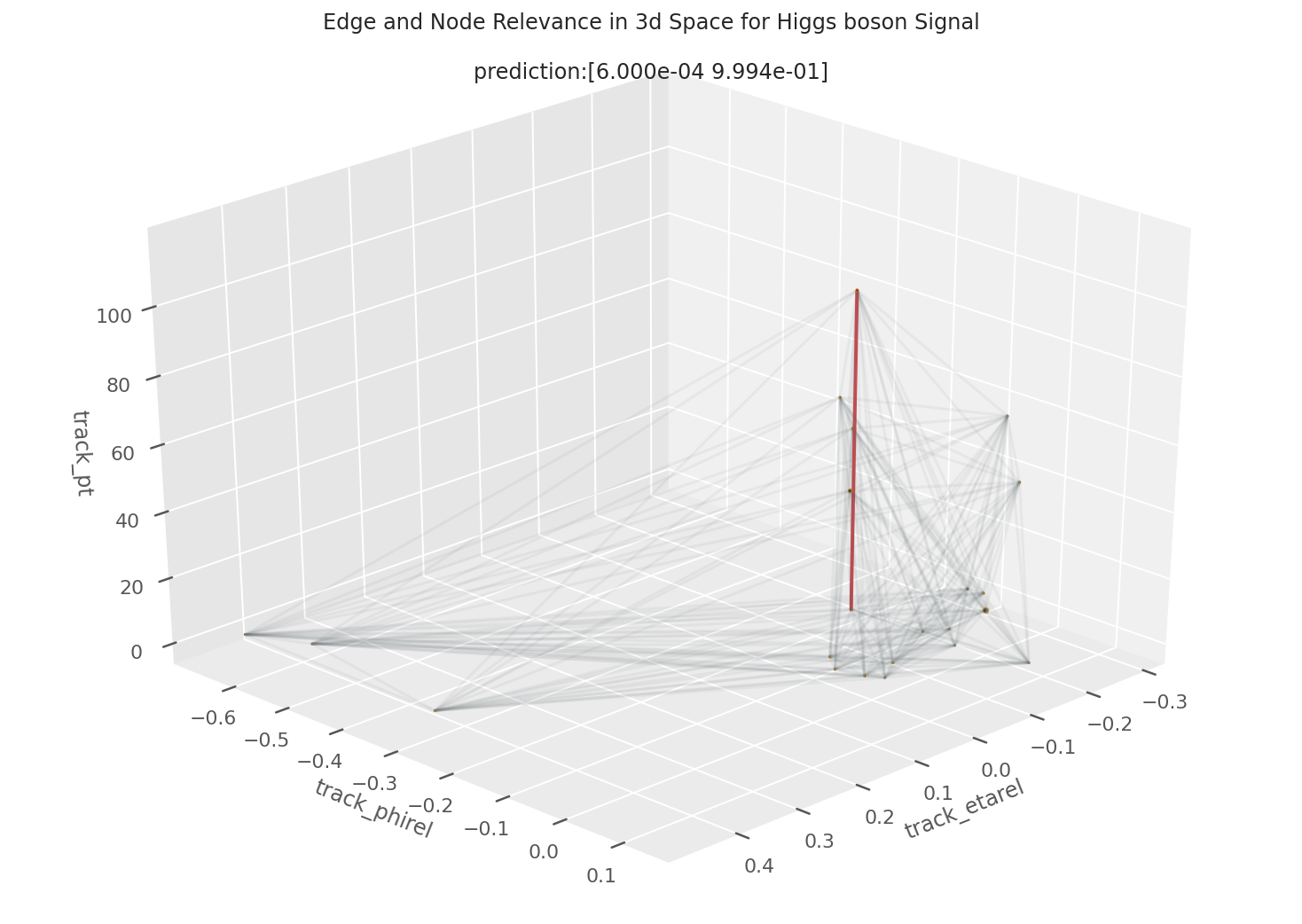

EXAMPLE 2: 99.93% Higgs Boson Signal

Here’s another example output of our HIN-LRP. Like in the previous example, the model is very confident that this jet is a Higgs boson signal. Compared to the heatmap in the previous example, this one displays an even more extreme relevance focus along just two columns, again alluding to the two-pronged decay into b hadrons.

Those two particles are the most significant nodes in the prediction, something that is clearly affirmed in the corresponding 3D plot on the right. Notably, it is the lower momentum tracks in this jet that are more responsible for classification, but even in this situation momentum itself is still a highly relevant feature, as you can see in the blue cell in particle 1. This time the HIN also made its prediction with positional information in addition to the momentum data in feature trackBTag_PtRel, highlighted in blue. The bright red cells at trackBTag_EtaRel relate to the track’s location in space; track_VTX_ass and track_fromPV are related to its closeness to the primary vertex.

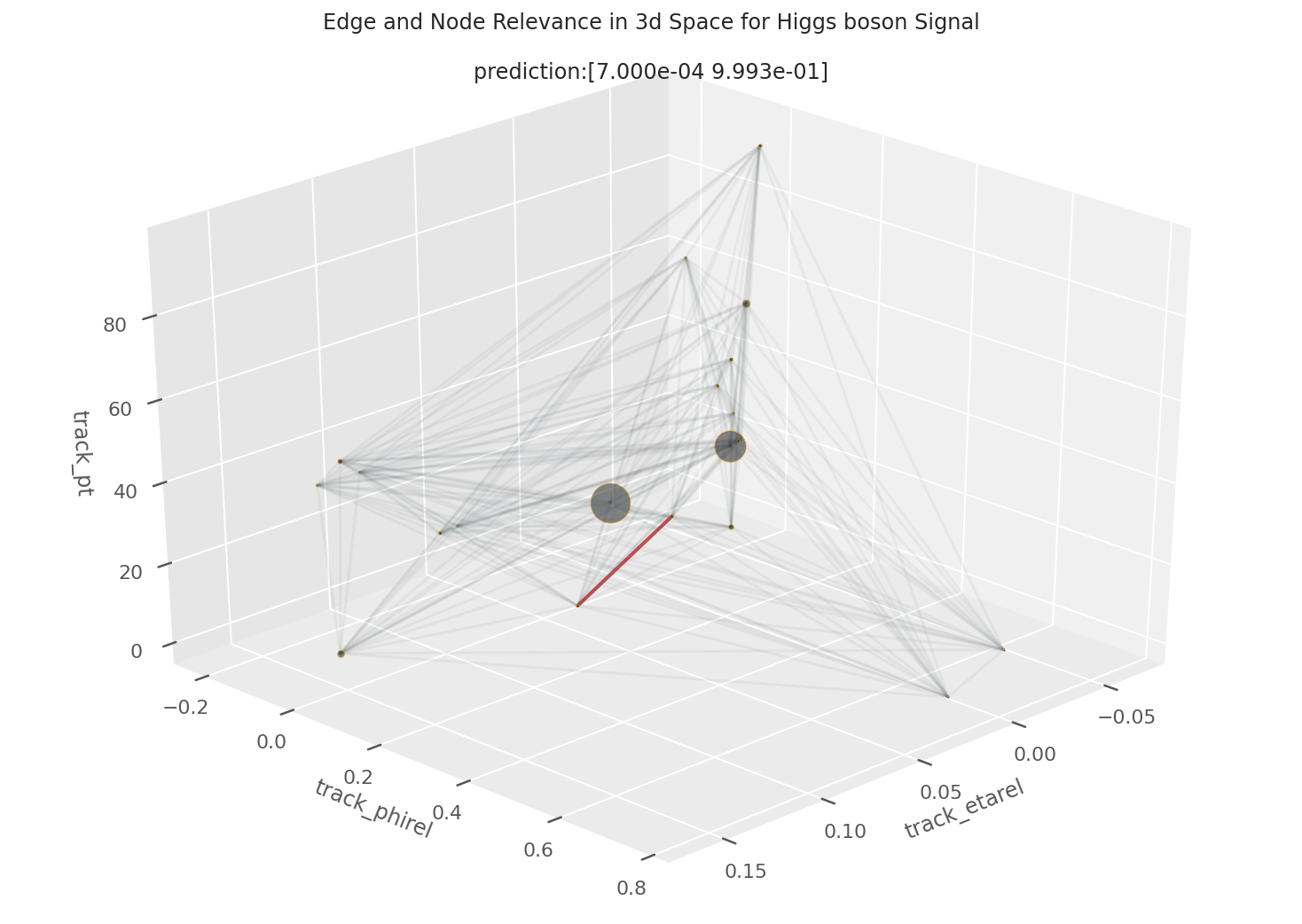

EXAMPLE 3: 99.88% Higgs Boson Signal

This Higgs boson jet is one of the first to get us excited about HIN-LRP’s ability to reveal the model’s relationship to physics.

If we draw our attention to the feature track_isMu, aka “is this particle a muon”, we'd see that track 19 is indeed a muon. As that super blue square indicates, the muon track is extremely important information when it comes to the model’s classification. This is great because it reflects real physics phenomena: muons are actually relatively common outputs of b hadron decay. And if b hadrons are decaying, there’s a good chance those b hadrons were descended from a Higgs boson signal. It’s promising that our model has gleaned the importance of the muon, and shows that the HIN is able to learn physics rules from the chaotic collision events, and that LRP is able to illuminate the HIN’s understanding in this manner.

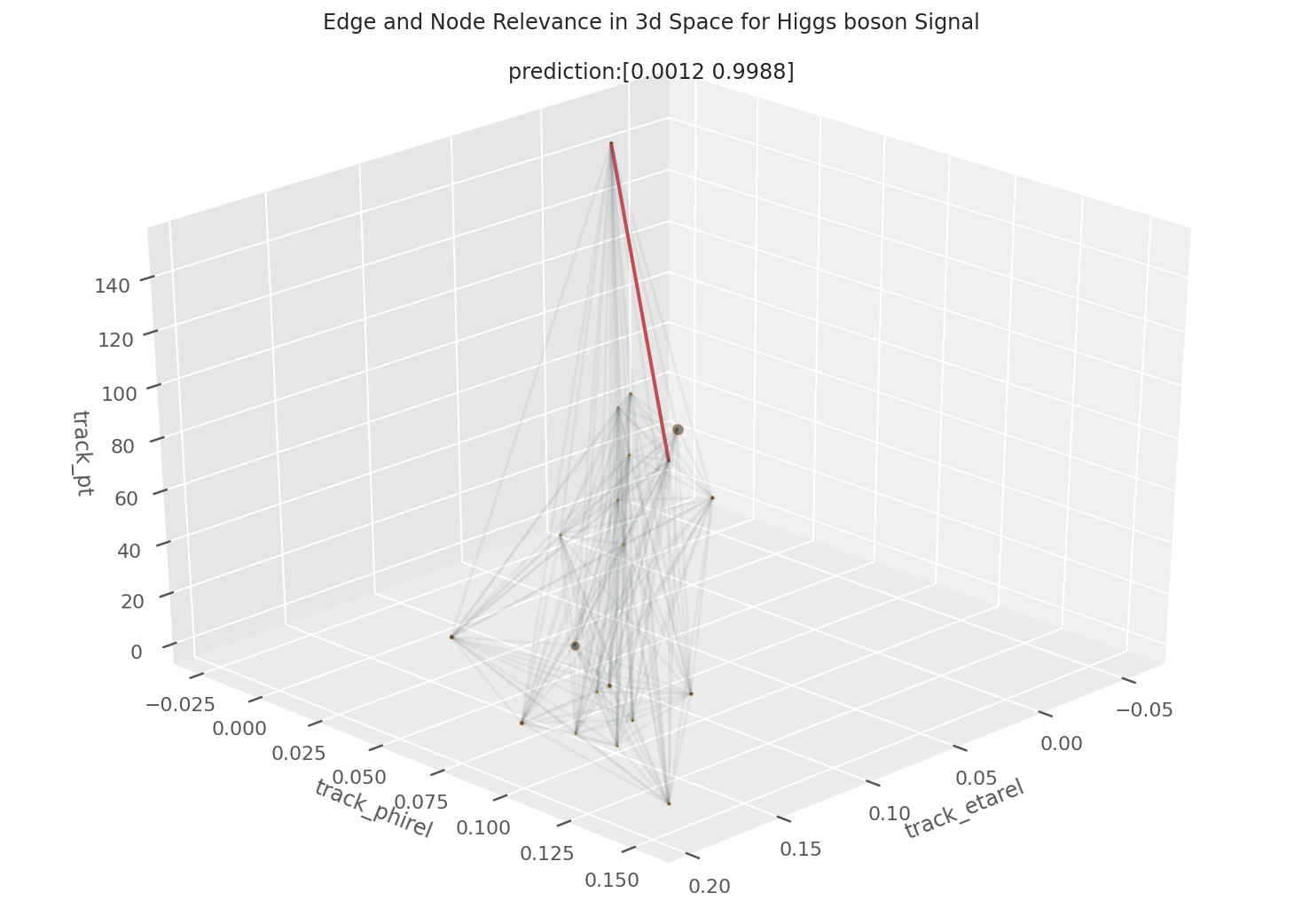

EXAMPLE 4: 99.95% Higgs Boson Signal

In this last example, the heatmap conveys two core pieces of information about our model’s decision.

In track 19, many cells are highly activated, which indicates that the model utilized a host of positional information like momentum from feature track_pt, angle from the jet axis from track_deltaR, track_etarel, and angle from the secondary vertex track_drminsv. Alongside this positional information in track 19 is the boolean for feature track_puppiw, which indicates whether the track is "pileup-like", or more colloquially, an intrusive track from a separate jet decay. This indicates that our model understands that all that other positional information is only helpful given that this track is actually native to this jet.

The other important cell is the bright red cell in track 18 under track_isEl, or "track is electron". Electrons are leptons, like muons, and also emerge in the decay of b hadrons at a significant rate. So, this particle feature, together with all the other information from track 19, is a strong indicator of the jet being related to a Higgs boson when present.

Conclusion

We presented a specific way to interpret the inner workings of the Higgs boson interaction network through layerwise relevance propagation, and have found that our methodology does, to a significant extent, reflect the foundations of theoretical physics that the model is trained on.

This is not the only way to visualize the outputs of layerwise relevance propagation, this is not the only way to implement layerwise relevance propagation, and LRP is not even the only way to approach interpreting this type of model. What this is, however, is one of the first forays into utilizing GNN interpretation in the particle physics domain.

We can now successfully understand how the HIN makes decisions on a jet by jet level in a way that was not explored before. As GNN explanation is constantly innovating, the tactics we have outlined can be further refined alongside the latest methodologies—varied LRP rules, generalized heuristics, relevant walks—to reach an even deeper understanding of how deep learning comes to understand the laws of physics.

Photograph from HDWallsbox.